Exostellar has joined the Vultr Cloud Alliance, bringing a unified heterogeneous AI infrastructure orchestration platform to teams running workloads across Vultr’s global cloud data center regions. The partnership lets customers use Exostellar’s orchestration and optimization capabilities to schedule, place, and optimize AI workloads across the GPU types available on Vultr, improving utilization, reducing overhead, and establishing a consistent operational model for distributed compute.

Meet Exostellar and see how they enhance AI operations on Vultr

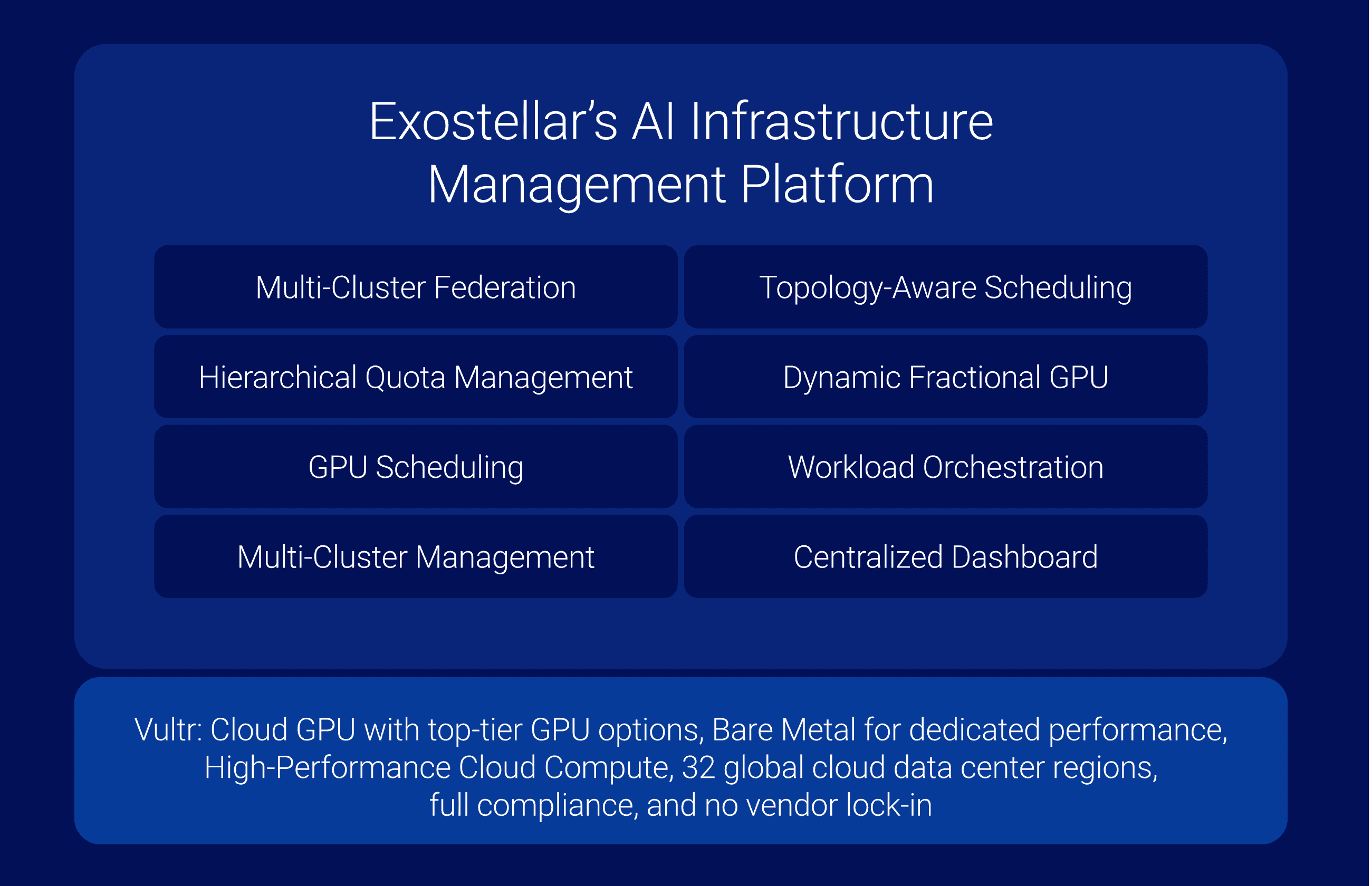

Exostellar is an AI infrastructure orchestration platform built for enterprises, research groups, and platform engineering teams that run distributed GPU environments. The platform uses multi-cluster federation to unify heterogeneous GPU resources into a single, shared pool, so teams can view and manage all Vultr regions through a single control plane. Within that pool, topology-aware scheduling places each workload where it fits best, based on its memory needs and workload pattern. To govern the shared environment, Exostellar provides hierarchical quota management, giving administrators precise control over resource sharing and queuing to ensure fair access.

Finally, to maximize efficiency, the platform supports flexible fractionalization, allowing several inference jobs or AI agents to run on the same device without wasting resources. These features deliver substantial gains, with many teams achieving 14 times higher efficiency, more than 50% cost savings, and 10 times more compute availability. Vultr is partnering with Exostellar to give customers an efficient, consistent, and scalable way to operate mixed GPU workloads across Vultr’s global cloud data center regions.

“The future of AI depends on seamless, distributed compute,” said Kevin Cochrane, Vultr’s CMO. “Exostellar’s platform, paired with Vultr’s global GPU infrastructure, allows teams to optimize every workload without compromise, unlocking performance that was previously difficult to achieve.”

“Modern AI Infrastructure requires precise, intelligent control of compute. By pairing Vultr’s global GPU infrastructure with Exostellar’s fractional GPU allocation, topology-aware scheduling, multi-cluster federation, and hierarchical quota management, we deliver a unified execution layer that treats heterogeneous GPUs as one optimized pool. This ensures workloads, from dense inference to distributed training, receive exactly the resources they need with minimal waste and consistent performance.” — Onur Aksoy, Chief Revenue Officer, Exostellar

The joint solution: Integrated AI infrastructure

Our partnership combines Exostellar’s orchestration platform with Vultr’s high-performance cloud infrastructure. This includes Vultr Cloud GPU with access to top-tier GPUs from multiple vendors, bare metal for dedicated performance, and scalable cloud compute, all delivered with full compliance and no vendor lock-in.

Exostellar integrates with Vultr by identifying available compute resources across regions and managing them as a single resource pool. This unified approach allows teams to manage both NVIDIA and AMD GPUs from a single control plane, eliminating hardware silos. Its scheduler places workloads on the most suitable GPU resources and adjusts allocations as demand changes, providing teams with a consistent and efficient way to run AI workloads on Vultr.
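To make the idea of a single resource pool concrete, here is a minimal Python sketch of best-fit placement across a mixed-vendor pool. It is an illustration only, not Exostellar's scheduler or API: the `Gpu` and `Job` classes, the `place` function, the region names, and the memory sizes are all hypothetical, and memory is the only placement criterion considered.

```python
from dataclasses import dataclass


@dataclass
class Gpu:
    region: str      # hypothetical region label
    vendor: str      # "NVIDIA" or "AMD"
    memory_gb: int   # illustrative capacity, not a real SKU
    free: bool = True


@dataclass
class Job:
    name: str
    memory_gb: int   # memory the job needs


def place(job, pool):
    """Best fit: pick the free GPU with the least memory that still fits the job."""
    candidates = [g for g in pool if g.free and g.memory_gb >= job.memory_gb]
    if not candidates:
        return None  # nothing fits; a real scheduler would queue the job
    best = min(candidates, key=lambda g: g.memory_gb)
    best.free = False
    return best


# One pool spanning regions and vendors (all values are made up).
pool = [
    Gpu("region-a", "NVIDIA", 80),
    Gpu("region-b", "AMD", 192),
    Gpu("region-b", "NVIDIA", 48),
]

small = place(Job("small-inference", 40), pool)
large = place(Job("large-training", 100), pool)
too_big = place(Job("oversized", 500), pool)
```

In this sketch the small job lands on the 48 GB device and leaves the larger devices free for the job that needs them, regardless of vendor or region. A production scheduler would weigh many more factors, such as interconnect topology, quotas, and changing demand.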

Real workloads that benefit from coordinated GPU management

- Training and inference on shared GPU capacity: Training and inference workloads can pull from the same unified GPU pool across Vultr regions. Idle resources are used immediately, reducing wait times and improving throughput.

- Mixed GPU workloads across regions: Teams running workloads that perform differently on various GPU types can rely on Exostellar to allocate the most suitable hardware without manual intervention.

- High-density inference and AI agents: Smaller models and agent workloads can share accelerators instead of reserving full devices, increasing model-serving density and lowering cost.

- Research, simulation, and HPC workloads: Research groups gain access to Vultr Cloud GPU with consistent scheduling and predictable performance during variable demand periods.
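The high-density inference case can be pictured as a packing problem. The Python sketch below uses a simple first-fit-decreasing heuristic to place fractional GPU requests onto as few devices as possible. It is a hedged illustration, not how Exostellar implements fractionalization: the `pack` function, the workload names, and the requested fractions are all hypothetical.

```python
def pack(requests, capacity=1.0):
    """First-fit decreasing: place each fractional request on the first device with room.

    requests maps a workload name to the fraction of one GPU it needs.
    Returns a list of devices, each a list of (name, fraction) tuples.
    """
    devices = []
    ordered = sorted(requests.items(), key=lambda kv: kv[1], reverse=True)
    for name, frac in ordered:
        if not 0 < frac <= capacity:
            raise ValueError(f"{name}: fraction must be in (0, {capacity}]")
        for dev in devices:
            if sum(f for _, f in dev) + frac <= capacity:
                dev.append((name, frac))
                break
        else:
            # No existing device has room, so open a new one.
            devices.append([(name, frac)])
    return devices


# Hypothetical workloads and the share of a GPU each one needs.
requests = {
    "agent-a": 0.25,
    "agent-b": 0.25,
    "small-llm": 0.5,
    "embedder": 0.5,
    "reranker": 0.25,
}

devices = pack(requests)
for i, dev in enumerate(devices):
    print(f"gpu-{i}: {dev}")
```

With these made-up numbers, five workloads that would otherwise reserve five full devices fit on two. The fractions here are plain numbers; on real hardware the share would map to whatever partitioning mechanism the device and platform support.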

This partnership provides businesses with a single control plane to manage heterogeneous GPU resources across Vultr’s cloud data center regions, enhancing utilization and reducing delays through more accurate workload placement. The combined capabilities make it easier for infrastructure, research, and platform teams to run AI workloads on Vultr efficiently while reducing operational effort.