This is a guest post authored by Lablup Inc.

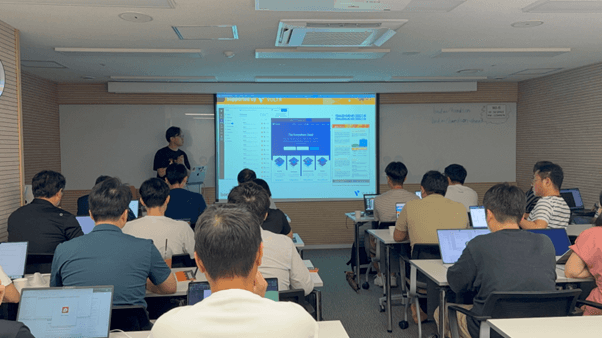

Lablup Inc. is committed to making Backend.AI accessible and easy to use for as many people as possible. To that end, we host Backend.AI Hands-on workshops two to four times a year, where participants can dive in and experience Backend.AI for themselves.

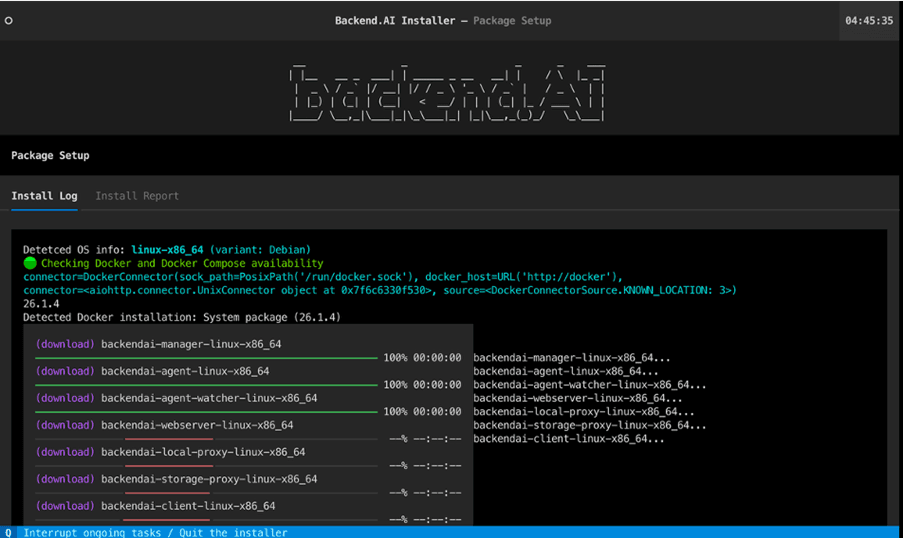

Our Hands-on program is a practical, experience-driven course. Participants install a minimal Backend.AI environment and a lightweight LLM on a single instance, then run the model services themselves. Using their own laptops with Ubuntu Linux, attendees download the latest Backend.AI binaries from our official website and install everything in an all-in-one setup for maximum convenience.

One of the biggest challenges in running these workshops is providing each participant with a high-performance development environment, such as a GPU instance. GPU resources can be costly and difficult for individuals to access, so many participants prefer remote environments. While some companies use their own internal GPU resources, these are often limited in scale and not flexible enough to meet workshop needs.

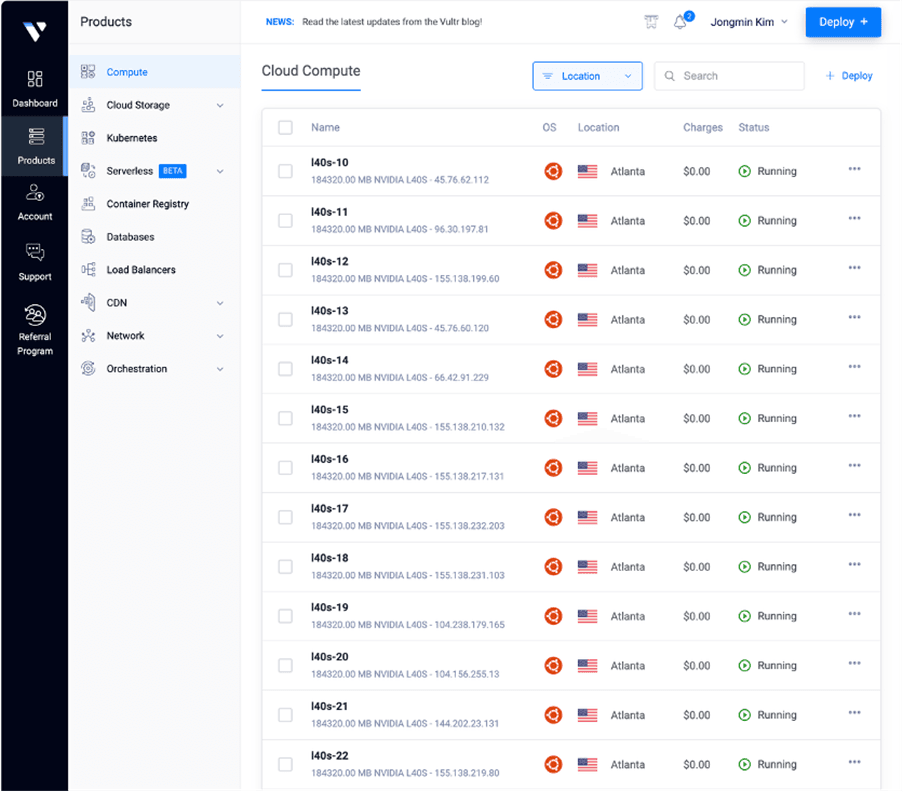

To solve this, Lablup partnered with Vultr, which generously provided over 30 L40S GPU instances for our hands-on session. Our team pre-configured each instance so that every participant could access a uniform, ready-to-go GPU environment. This allowed everyone to work independently and made for our smoothest, most efficient workshop experience yet.

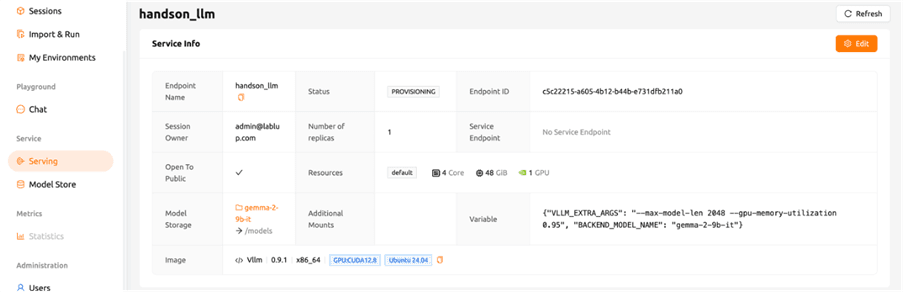

We were delighted to see so many participants join us. Everyone successfully installed Backend.AI and ran the Hugging Face google/gemma-2-9b-it model on their own instance. Beyond the installation itself, participants had the chance to think about how to use Backend.AI more effectively in real-world scenarios and how to bring AI into their own workflows.

Our decision to leverage Vultr’s cloud GPU resources was driven by our mission: to make high-performance AI infrastructure accessible and practical for everyone. Recently, Lablup has also worked with Vultr to run a variety of benchmark projects using Backend.AI on Vultr Cloud GPUs powered by NVIDIA’s latest B200 hardware. We’ve explored how Vultr Cloud GPUs and Backend.AI can create synergy, especially in generating synthetic data for domain-specific LLMs.

Read more about these projects.