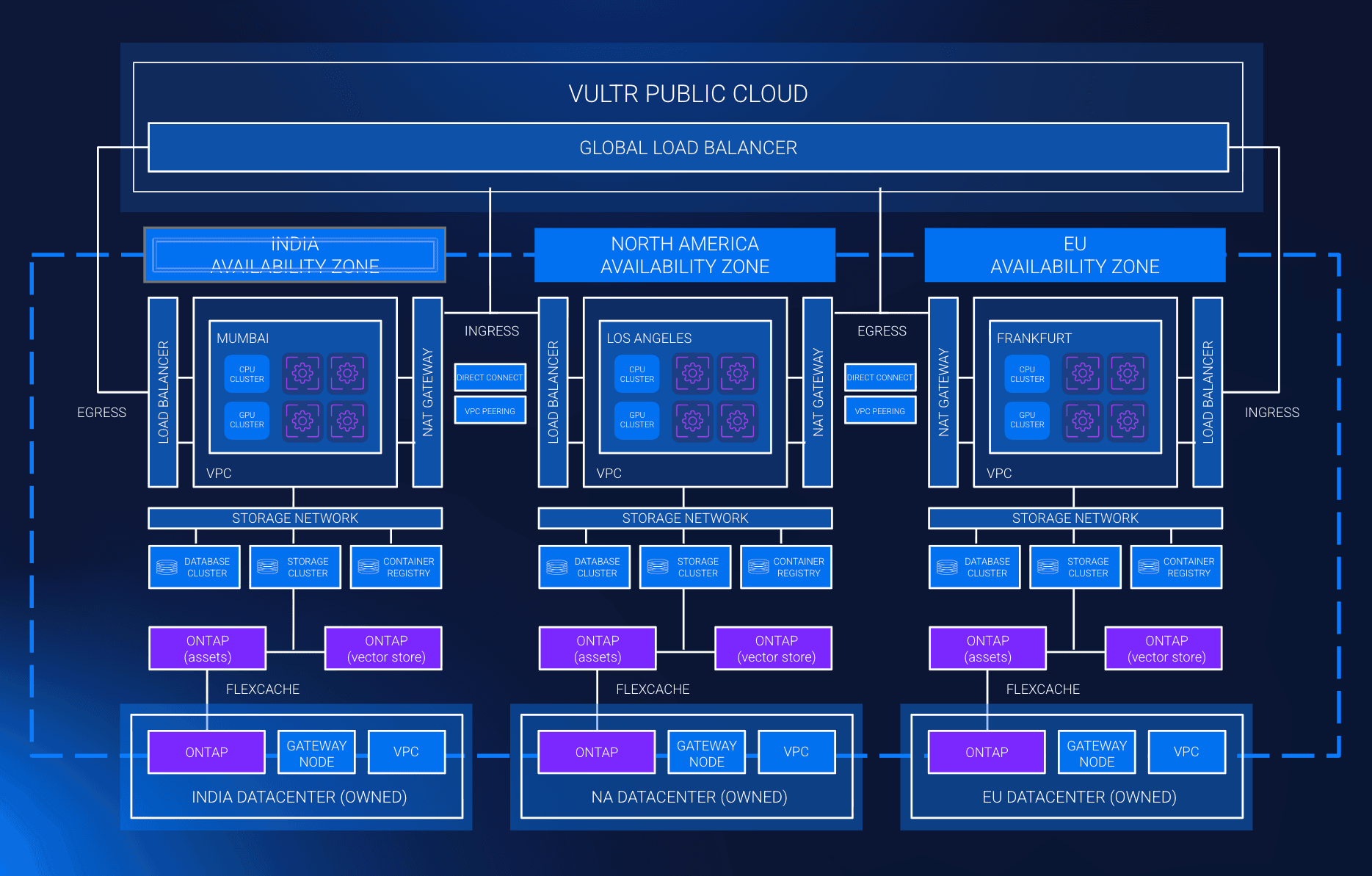

Vultr, AMD, and NetApp, three members of the Vultr Cloud Alliance, are collaborating on a new reference architecture designed to help organizations run data-intensive and AI workloads across hybrid and sovereign cloud environments, without increasing operational complexity.

This collaboration combines Vultr’s global and sovereign cloud regions, AMD Instinct™ GPUs with the AMD ROCm™ open software platform, and NetApp ONTAP data management. Together, the three teams aligned on a clear objective: create a reproducible architecture that centralizes distributed data, supports high-performance compute and AI, and maintains control over data locality and compliance.

A unified hybrid-cloud data platform built for AI and enterprise workloads

The reference architecture introduces a hybrid cloud pattern that consolidates data from multiple on-premises NetApp environments into a single cloud-based environment hosted on Vultr.

Data from multiple on-premises sites is replicated into a single NetApp ONTAP environment in the cloud, where it is immediately accessible to Vultr compute and GPU resources. This gives teams a central, consistent view of all datasets, making it easier to run analytics, build AI models, or support business continuity and disaster recovery workflows without disrupting existing operations.

AI and accelerated computing workloads run on AMD Instinct GPUs, supported by the AMD Enterprise AI Suite and the AMD ROCm software platform. These tools provide optimized libraries, model execution frameworks, and performance-tuned components that streamline training, tuning, and inference.

This architecture can also be extended with AMD AI Blueprints, which provide validated patterns for building AI pipelines, data workflows, and model lifecycle processes on Vultr infrastructure integrated with NetApp ONTAP. The blueprints give teams a clear path to scale AI development while keeping data organized and accessible across environments.

Because the design can be deployed within Vultr sovereign cloud regions, organizations retain the data residency and compliance controls required in regulated sectors while benefiting from cloud-scale compute.

Built for performance, control, and long-term flexibility

This collaboration demonstrates how enterprises can modernize hybrid cloud and AI operations without redesigning their existing on-premises environments. NetApp ONTAP provides the trusted replication and governance layer. Vultr delivers flexible and cost-efficient cloud compute with sovereign region support. AMD accelerates AI with Instinct GPUs, ROCm, the AMD Enterprise AI Suite, and the AMD AI Blueprints program.

The result is a practical and validated architecture that supports AI workloads, analytics pipelines, enterprise applications, and disaster recovery, while maintaining predictable and secure data mobility and governance.

Talk to us at Gartner IOCS

If you would like to explore the AMD, NetApp, and Vultr reference architecture or discuss hybrid cloud data strategies, AI workloads, or sovereign cloud deployments, meet the Vultr team at Gartner IOCS. Our team will be available throughout the event to walk through the architecture and discuss how it supports analytics and enterprise-scale workloads.